I manage or monitor a few servers, and it’s a good idea to keep an eye on how they’re holding up. The Linux `uptime` command is all I need – show me how long the server’s been up (or if it’s cycled recently – power hiccup?), and CPU load averages.

I just whipped up a dead-simple solution to let me embed the uptime reports from the servers into my retro homepage.

I’m quite sure there are better and/or more robust ways to do this, but this is what I came up with after maybe 2 minutes of thought.

On each of the servers, I added a shell script called “`uptimewriter.sh`” (in my `~/bin` directory, so located at `~/bin/uptimewriter.sh`). I made the file executable (`chmod +x ~/bin/uptimewriter.sh`) and used this script:

#!/bin/sh

echo "document.write('$(uptime)');"

All it does is wrap the output of the `uptime` command in some JavaScript code to display the text when embedded on a web page. I then added it to the crontab on each of the servers, running every 15 minutes and dumping the output into a file that is visible via the webserver.

*/15 * * * * ~/bin/uptimewriter.sh > ~/public_html/uptime.js 2>&1

Every 15 minutes, cron runs the `uptimewriter.sh` script and writes its output to a JavaScript file that can be pulled in and displayed on a web page.
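One fragile spot in this approach is that the `uptime` text is pasted straight into a single-quoted JavaScript string, and the cron redirect empties `uptime.js` before the script has written anything. Here is a minimal sketch of a slightly more defensive variant. The helper names `js_wrap` and `write_uptime_js` are made up for this example and are not part of my original script:

```shell
#!/bin/sh
# Sketch only: js_wrap and write_uptime_js are hypothetical helper names.

# Wrap one line of text in a document.write() call, escaping
# backslashes and single quotes so the JavaScript string stays valid.
js_wrap() {
    escaped=$(printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e "s/'/\\\\'/g")
    printf "document.write('%s');\n" "$escaped"
}

# Write the wrapped uptime report to a temp file, then rename it into
# place so the web server never serves a half-written file.
write_uptime_js() {
    target=$1
    tmp="$target.tmp.$$"
    js_wrap "$(uptime)" > "$tmp" && mv "$tmp" "$target"
}
```

With something like this, the script run by cron would call `write_uptime_js ~/public_html/uptime.js` itself instead of relying on the `>` redirect in the crontab line.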

Then, on my retro home page, I added code to run the javascript file:

When called from a web page, that will render the output of the `uptime` command, wrapped in a `document.write()` call as per the `uptimewriter.sh` script, displaying it nicely:

I can do some more work to style it a bit, so it wraps more nicely, but it’s a decent start.

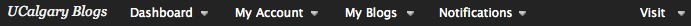

On UCalgaryBlogs, I’d modified the adminbar to include a link to the current site’s dashboard if a person was logged in, making it easy to get to the members-only side of WordPress without having to go through My Blogs and finding the right blog, then mousing over the pop-out “Dashboard” link. Most people never found that, and it’s not very intuitive.

So, I hacked in a hard-coded link to Dashboard in bp-core-adminbar.php. This worked, but meant I had to remember to re-hack the file after running a BuddyPress update. I forgot to do that right after I ran the last upgrade, and got emails from users asking WTF?

I decided to figure out the best way to add in the Dashboard link without hacking the actual plugin files. Turns out, it’s drop-dead simple. Yay, WordPress.

In your /wp-content/plugins/ directory, create a file called bp-custom.php (if it’s not there already), and drop this code into it:

<?php

// custom functions for tweaking BuddyPress

function custom_adminbar_dashboard_button() {
    // adds a "Dashboard" link to the BuddyPress admin bar if a user is logged in.
    if (is_user_logged_in()) {
        echo '<li><a href="/wp-admin/">Dashboard</a></li>';
    }
}

add_action('bp_adminbar_menus', 'custom_adminbar_dashboard_button', 1);

?>

When in place, your BuddyPress adminbar will look something like this:

Yes, I know I should do something to properly detect user levels and privileges, rather than just providing the Dashboard link willy-nilly to anyone that’s logged in, but the link itself just provides access to whatever Dashboard features the user is allowed to see, so there’s no security risk. Better to just say that a user can see the Dashboard for any site they’re logged into, and let WordPress deal with restricting access properly.

I should also deal with the possibility of WPMU being configured as a subdirectory vs. subdomain (the /wp-admin/ link will bork if you’re using subdirectories – better to use the real code to sniff out the base url of the current site…)

I’ve been having a heck of a time battling sploggers at UCalgaryBlogs.ca – roaches that create accounts and blogs so they can foist their spam links to game Google (thanks for providing spammers with such a powerful incentive, Google).

There’s an option in WordPress Multiuser to ban email domains – provide the domains, one per line, into a text box, and it will reject any roaches trying to create accounts from those domains.

The biggest offenders have been myspace.info and myspacee.info – and although they’ve been in my Banned Email Domains list for months, they just keep getting through. I figured there was some exploit they were using, but couldn’t find a thing.

So, today, I took a look through the code of WPMU 2.8.4, to see if I could find what was going on. Turns out, it’s a really simple fix. There’s a function in wp-includes/wpmu-functions.php, called is_email_address_unsafe() – it’s supposed to check the contents of the Banned Email Domains option field, and reject addresses from the flagged domains.

Except it wasn’t. Rejecting, I mean. It was letting everyone through, because of a simple bug in the code. It was written to treat the value of the option as an array and to directly walk through each item of the array. But, the option is stored as a string, so it needs to be converted to an array first. Easy peasy. Here’s my updated is_email_address_unsafe() function, which goes around line 880 of wpmu-functions.php:

function is_email_address_unsafe( $user_email ) {
    $banned_names_text = get_site_option( "banned_email_domains" ); // grab the string first
    $banned_names = explode("\n", $banned_names_text); // convert the raw text string to an array with an item per line
    if ( is_array( $banned_names ) && empty( $banned_names ) == false ) {
        $email_domain = strtolower( substr( $user_email, 1 + strpos( $user_email, '@' ) ) );
        foreach( (array) $banned_names as $banned_domain ) {
            if( $banned_domain == '' )
                continue;
            if (
                strstr( $email_domain, $banned_domain ) ||
                (
                    strstr( $banned_domain, '/' ) &&
                    preg_match( $banned_domain, $email_domain )
                )
            )
                return true;
        }
    }
    return false;
}

The fix is in the first 2 lines of the function – getting the value of the string, and then exploding that into the array which is then used by the rest of the function. I’ve tested the updated function out on UCalgaryBlogs.ca and it seems to work just fine. Hopefully the fix will get pulled into the next update of WPMU so everyone with Banned Email Domains can breathe a bit more easily.
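The shape of the bug is easy to reproduce outside PHP. Here is a small shell sketch of the same idea: the ban list arrives as one newline-separated string, so it has to be split into lines before each entry can be compared against the address's domain. The function name `is_banned_domain` is hypothetical, and this only illustrates the logic. It is not WPMU code.

```shell
#!/bin/sh
# Illustration only: is_banned_domain is a hypothetical helper, not WPMU code.

# Usage: is_banned_domain EMAIL BANNED_LIST
# BANNED_LIST is a single string with one domain per line.
# Returns 0 (true) if the email's domain contains a banned entry.
is_banned_domain() {
    # Everything after the first "@", lowercased.
    domain=$(printf '%s' "${1#*@}" | tr '[:upper:]' '[:lower:]')
    # Read the string one line at a time; skip blank entries.
    while IFS= read -r banned; do
        [ -n "$banned" ] || continue
        case "$domain" in
            *"$banned"*) return 0 ;;   # substring match, like strstr()
        esac
    done <<EOF
$2
EOF
    return 1
}
```

Treating the whole list as a single item, which is roughly what the original function did, would only ever match an address whose domain contained the entire multi-line string.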

I’m working with a class of 250+ geology undergrads, split up into 53 groups. They’re using a WordPress site to publish online presentations as the product of a semester-long group project. I’m using the great WP-Sentry plugin to let them collaboratively author the pages without worrying about other students in the class being able to edit their work (I know – but it makes them more comfortable so it’s a good thing to add).

The premise is this – I created a Page called, creatively enough, “Winter 2009” – and each of the groups is to create a page (or set of pages) and add them to the site – and selecting “Winter 2009” as the parent page for the main page of their presentation. They are free to create as many other pages as they like, and can set those to use their first page as the parent, thereby generating a table of contents.

Works great. Except that the WP-Sentry plugin hijacks the “Private” state of pages, and the tree of Pages available in the Parent selector is based on “Published” pages.

Conflict. Confusion. Frustration.

The students could either collaborate on the pages, or organize them in the tree structure.

Of course they could create the pages and add them to the tree structure and THEN enable the WP-Sentry-managed group editing controls, but YOU try explaining that process to 250 undergrads, all stressed out about building web pages as part of a geology course.

So… I dug into the code to see what was yanking “Private” pages from the Parent list. Turns out, it’s in wp-includes/post.php, waaaay down on line 2618 (as of WPMU 2.7). All I did was remove the " AND post_status = 'publish'" bit, and it now appears to be listing all pages.

I’m quite sure I borked something else, but for now I’m leaving the Parent list wide open until the students are done publishing their presentations.

Update: Unintended consequence #242: Looks like with the tweak, Private pages show up where they’re not expected. I’m disabling the tweak for now until I can find a better way (if that’s even possible).

I just tried logging into ucalgaryblogs.ca using a test user account, and was surprised to see a strange item in the admin bar at the top of the page:

I was curious, so I clicked it.

mwah? Those are site-admin items, being displayed to a non-admin user. I was actually able to click the “Admin Message” item to set that, even though the logged in user wasn’t an admin. Scary. Luckily, nobody’s noticed the extra menu yet – or if they have, they’ve behaved.

I poked around in the wordpress-admin-bar.php file to see if I could plug the hole. I have no idea if this is the right way, but I’ve added this bit:

} else {

if ($menu[0]['title'] === null) continue; // this is the line I added

echo ' <li class="wpabar-menu_';

if ( TRUE === $menu[0]['custom'] ) echo 'admin-php_';

It’s down around line 320 or so. It’s probably not the correct or most reliable way to strip that menu from non-admin-users’ version of the admin bar, but it worked. Here’s the result:

Has anyone else seen the extra menu? Could it have just been a freak thing only on my WPMU install, or is this a wide open potential security problem in the shipping wordpress-admin-bar.php file? It was written for non-WPMU WordPress, so it’s quite possible it just doesn’t grok the different types of users in WPMU.

A few times on Twitter, I’ve mentioned how “easy” it is to move stuff between servers using the rsync shell command. It’s actually an extremely powerful program for synchronizing two directories – even if they’re not on the same volume, or even on the same computer. To do this, you’ll need to log in to one of the servers via SSH. Once there, invoke the geeky incantation:

rsync -rtlzv --ignore-errors -e ssh . username@hostname:/path/to/directory

What that basically says is, “run rsync, and tell it to recursively copy all directories, preserving file modification times, maintaining proper symlinks (for aliases and stuff like that), compressing the files as they’re being copied in order to save bandwidth, and providing verbose updates as you’re doing it. Use SSH as the protocol, to securely transfer stuff from the current directory to the server ‘hostname’ using the username ‘username’. On that destination server, stuff the files and directories into ‘/path/to/directory’.”

What you’ll need to change:

- . – if you want to specify a full path to the source directory, put it in place of the . (which means “here” in shellspeak)

- username – unless your username on the destination server is “username” – you’ll be prompted for the password for that account after hitting return and starting the program working.

- hostname – the IP address or domain name of the server you want to send the files to.

- /path/to/directory – I’m always a bit fuzzy on this. Can it be a relative path? from where? So I just specify the full path to where I want the files to go. Something like /home/dnorman/

Because it compresses files, it’s actually pretty efficient at moving a metric boatload of stuff between servers. I’ve used this technique to easily migrate from Dreamhost to CanadianWebHosting.com and I use it regularly to move files around on campus. I use a variation of this technique to regularly back up servers as well – the beauty of rsync is that it only copies files that have been added or modified, so backing up a few gigs worth of server really only involves transferring a few megabytes of files, and can be done routinely in a matter of minutes or seconds.
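Before pointing rsync at a real server, it can help to print the exact command and do a dry run first. Here is a minimal sketch, assuming a hypothetical wrapper function named `push_dir`. The `-n` flag is rsync's own dry-run option, while the `DRY_RUN` and `PRINT_ONLY` variables are conventions invented for this example. On the relative-path question: over SSH, a destination path without a leading slash is resolved relative to the remote user's home directory.

```shell
#!/bin/sh
# Sketch only: push_dir, DRY_RUN and PRINT_ONLY are hypothetical names.

# Usage: push_dir SOURCE USER HOST DEST
# Prints the rsync command, then runs it unless PRINT_ONLY=1.
# With DRY_RUN=1, rsync's -n flag lists what would be copied without copying.
push_dir() {
    flags="-rtlzv"
    if [ "${DRY_RUN:-0}" = "1" ]; then
        flags="${flags}n"
    fi
    echo "rsync $flags --ignore-errors -e ssh $1 $2@$3:$4"
    if [ "${PRINT_ONLY:-0}" = "1" ]; then
        return 0
    fi
    rsync "$flags" --ignore-errors -e ssh "$1" "$2@$3:$4"
}
```

For example, `DRY_RUN=1 push_dir . username hostname /path/to/directory` would show what rsync intends to transfer without touching the destination.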

We use the Events module to manage workshops here in the Teaching & Learning Centre, and use the “Upcoming Events” block to display the next few workshops on our website. Works great, but the default text leaves a bit to be desired. By default, it shows the event title, and “(2 days)” – which indicates that the event begins in 2 days.

But, it could also mean that the event lasts for 2 days.

So, I just added a trivial change to the event.module file, adding the following line of code at line 1847 (on my copy of the file, which was checked out on June 4, 2007):

$timeleft = 'starts in ' . $timeleft;

That changes the text indicator in the “Upcoming Events” block to read:

(starts in 2 days)

Which is much clearer in meaning. Easy peasy. I just have to remember to edit the module after updating, if this doesn’t make it in…

After realizing that the sympal_scripts were silently failing to properly call cron.php on sites served from subdirectories on a shared Drupal multisite instance, I rolled up my sleeves to build a script that actually worked. What I’ve come up with works, but is likely not the cleanest or most efficient way of doing things. But it works. Which is better than the solution I had earlier today.

I also took the chance to get more familiar with Ruby. I could have come up with a shell script solution, but I wanted the flexibility to more easily extend the script as needed. And I wanted the chance to play with Ruby in a non-Hello-World scenario.

Here’s the code:

#!/usr/local/bin/ruby

# Drupal multisite hosting auto cron.php runner
# Initial draft version by D'Arcy Norman dnorman@darcynorman.net
# URL goes here
# Idea and some code from a handy page by (some unidentified guy) at http://whytheluckystiff.net/articles/wearingRubySlippersToWork.html

require 'net/http'

# this script assumes that $base_url has been properly set in each site's settings.php file.
# further, it assumes that it is at the START of a line, with spacing as follows:
# $base_url = 'http://mywonderfuldrupalserver.com/site';
# also further, it assumes there is no comment before nor after the content of that line.

# customize this variable to point to your Drupal directory
drupalsitesdir = '/usr/www/drupal' # no trailing slash

Dir[drupalsitesdir + '/sites/**/*.php'].each do |path|
  File.open(path) do |f|
    f.grep( /^\$base_url = / ) do |line|
      line = line.strip();
      baseurl = line.gsub('$base_url = \'', '')
      baseurl = baseurl.gsub('\';', '')
      baseurl = baseurl.gsub(' // NO trailing slash!', '')
      if !baseurl.empty?
        cronurl = baseurl + "/cron.php"
        puts cronurl
        if !cronurl.empty?
          url = URI.parse(cronurl)
          req = Net::HTTP::Get.new(url.path)
          res = Net::HTTP.start(url.host, url.port) {|http|http.request(req)}
          puts res.body
        end
      end
    end
  end
end

No warranty, no guarantee. It works on my servers, and on my PowerBook.

Some caveats:

- It requires a version of Ruby more recent than what ships on MacOSX 10.3 server. Easy enough to update, following the Ruby on Rails installation instructions.

- It requires $base_url to be set in the settings.php file for each site you want to run cron.php on automatically.

- It requires one trivial edit to the script, telling it where Drupal lives on your machine. I might take a look at parameterizing this so it could be run more flexibly.

- It requires cron (or something similar) to trigger the script on a regular basis.
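For comparison, the shell version I decided against might look roughly like this. It is a sketch under the same assumptions as the Ruby script ($base_url at the start of a line, single-quoted). The function names `extract_base_url` and `run_all_cron` are made up for this example, it only looks at files named settings.php, and it assumes `curl` is installed.

```shell
#!/bin/sh
# Sketch only: function names are hypothetical; assumes curl is available.

# Print the value of $base_url from one settings.php file, if set.
# Expects a line like:  $base_url = 'http://example.com/site';
extract_base_url() {
    sed -n "s/^\\\$base_url = '\\([^']*\\)';.*\$/\\1/p" "$1"
}

# Call cron.php for every site under DRUPAL_DIR/sites.
# Usage: run_all_cron DRUPAL_DIR   (no trailing slash)
run_all_cron() {
    find "$1/sites" -name settings.php | while IFS= read -r settings; do
        base=$(extract_base_url "$settings")
        [ -n "$base" ] || continue      # skip sites without $base_url
        echo "$base/cron.php"
        curl -s "$base/cron.php"
    done
}
```

A crontab entry would then run a small script that sources these functions and calls `run_all_cron /usr/www/drupal`.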

Update: I added a cleaned up copy of my script, in case it comes in handy for anyone. Read the end of this post for more info…

I just finished whipping up a workable bash script to automate installing and (basically) configuring a new site on a shared Drupal hosting server.

Here’s the basic scenario. First, you set up a “template” site, and configure it however you want all new sites to start. Add common accounts. Enable modules. Twiddle bits. Etc… Then, you export a mysqldump of that template site’s database, and use that to create new sites.

Now, whenever you want a new site, you just run this bash script and give the short site name as a parameter on the command line. It will create a symbolic link from the shared Drupal codebase to a location within the web directory, then create a new database and populate it using the freeze dried mysqldump. It will then make sure that the files directory in the new site’s “site” directory is writable, and insert some settings into settings.php to tell it to use the proper database and file_directory_path.

Initial tests show it’s working like a charm. I can now set up a new site in roughly 10 seconds. Very cool.

After I’ve cleaned up the script some more, and tested it a bit more (i.e., on a machine other than my Powerbook), I’ll post it online somewhere. It’s really a pretty simple script, now that it’s working. Including comments and liberal whitespace, it’s a whopping 52 lines of code (18 lines that actually do stuff).

I’ll also be doing some more playing around with the shiny new Drupal installer (part of the CVS branch of Drupal now) to see if that might be a better long term solution. Not sure how it handles tweaked settings. It handles enabling modules and themes, though…

Update: I’ve cleaned up the script, done some more testing, and it seems to work fine. So, here it is in case it comes in handy for anyone.

To make the automatic configuration of settings.php work, you need to modify your “template” settings.php to have “database_name_goes_here” where the database name goes (line 87 in my copy), and you can also have it set the files directory for the site if so desired, by editing the variable override array at the end of the file. Mine looks like this:

$conf = array(

'file_directory_path' => 'sites/file_directory_path_goes_here/files',

'clean_url' => '1'

);
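The placeholder substitution step can be sketched with `sed`. This is a minimal illustration rather than the script itself: the function name `configure_settings` is hypothetical, and it assumes the database is named after the site and that the site name contains only letters, digits, and underscores (so it is safe to drop into a sed replacement).

```shell
#!/bin/sh
# Sketch only: configure_settings is a hypothetical helper name.

# Usage: configure_settings TEMPLATE OUTPUT SITENAME
# Copies TEMPLATE to OUTPUT, filling in the two placeholders.
configure_settings() {
    # Refuse empty names and anything outside [A-Za-z0-9_].
    case "$3" in
        ''|*[!A-Za-z0-9_]*) echo "invalid site name: $3" >&2; return 1 ;;
    esac
    sed -e "s/database_name_goes_here/$3/g" \
        -e "s/file_directory_path_goes_here/$3/g" \
        "$1" > "$2"
}
```

Calling `configure_settings template-settings.php sites/mysite/settings.php mysite` would produce a settings.php pointing at the `mysite` database and `sites/mysite/files`. Both file paths here are placeholders.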

We’re using Subversion to manage files for all of our projects in the Teaching & Learning Centre. More projects means more Subversion repositories to back up. Instead of maintaining a list of projects and repositories, we stick all repositories in a common root directory, and I’ve just put together a dead simple script to automatically dump all of them to a directory of my choosing. I’ve added this script to the crontab for the www user on the server, and it runs svnadmin dump on all repositories, gzipping the output for archive (and possibly restore).

The output is stored in a specified backup directory, which is then picked up via rsync from my desktop Mac, and copied to the external backup drive.

#!/bin/sh

SVN_REPOSITORIES_ROOT_DIR="/svn_repositories/"
BACKUP_DIRECTORY="/Users/Shared/backup/svn/"

# every entry in the root directory is treated as a repository
for REPOSITORY in `ls -1 $SVN_REPOSITORIES_ROOT_DIR`
do
    echo "dumping repository: $REPOSITORY"
    # dump the repository and gzip the stream into the backup directory
    /usr/local/bin/svnadmin dump "$SVN_REPOSITORIES_ROOT_DIR$REPOSITORY" | gzip > "$BACKUP_DIRECTORY$REPOSITORY.gz"
done
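A slightly more defensive variant of the same loop, as a sketch: it globs for directories instead of parsing `ls`, quotes every path, and lets the `svnadmin` binary be overridden through a hypothetical `SVNADMIN` variable (my addition, handy for testing). The function name `dump_all` is also made up for this example.

```shell
#!/bin/sh
# Sketch only: dump_all and SVNADMIN are hypothetical names.

# Usage: dump_all REPOSITORIES_ROOT BACKUP_DIRECTORY   (no trailing slashes)
dump_all() {
    for repo in "$1"/*/; do
        [ -d "$repo" ] || continue          # glob matched nothing
        name=$(basename "$repo")
        echo "dumping repository: $name"
        # Dump the repository and gzip the stream into the backup directory.
        "${SVNADMIN:-/usr/local/bin/svnadmin}" dump "$repo" | gzip > "$2/$name.gz"
    done
}
```

Restoring should be the reverse pipe, something like `gunzip -c project.gz | svnadmin load /path/to/new/repository`, where the target is an empty repository created with `svnadmin create`. Both paths are placeholders.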
