Since we’re adopting Desire2Learn, the UofC sent a few folks to the annual Desire2Learn Fusion conference – the timing was extremely fortuitous, with the conference starting about a month after we signed the contract. I’d never been to a D2L conference before, so wasn’t sure really what to expect. Looking at the conference schedule ahead of time, it looked pretty interesting – and would have many sessions that promised to cover some of the extremely-rapid-deployment adoption cycle we’re faced with.

The short version of the conference is that I’m quite confident that we’ll have the technology in place by the time our users need it. But, I’m a little freaked out about our ability to train instructors before they need to start using D2L. We’ve got some plans to mitigate this, but this is the Achilles Heel of our implementation plan. If we can’t get our instructors trained… DOOM! etc…

The sessions at the conference were really good. I’d expected a vendor conference to focus on the shiny bits of the vendor’s tools, and on the magical wondrousness of clicking checkboxes and deploying software to make unicorns dance with pandas, etc… But it really wasn’t about the vendor’s product – for many of the sessions (even the rapid-deployment sessions that I went to), you could easily remove Desire2Learn’s products from the presentation and it would still have been an interesting and useful session. One of our team members who went to a session on online discussions commented something along the lines of “I was thinking I’d see how to set up the D2L discussion boards in a course – but they didn’t even talk about D2L! It was all pedagogy and learning theory…” The conference as a whole had extremely high production value. Surprisingly high. Whoever was responsible for the great custom-printed-conference-schedule lanyard badge deserves a raise or two.

This was also one of the most exhausting conferences I’ve been to. It’s the first one in a long time where I had to really be present, attend all sessions, and pay attention. We’re under just a little pressure to get this deployment done right, and there’s no time to screw up and backtrack. So, pay attention.

The sessions I chose largely share a theme. We’re looking at migrating from Bb8 to D2L starting now, and wrapping up with 100% adoption by May 2014. So, my session selection and note-taking focus was largely driven by the panic of facing 31,000 FTE students, a few thousand instructors, 14 deans, a Provost, a Vice Provost, and a CIO (when we get a new one) and being able to say “hey. we’re on it. we can do this.”

I’m not going to blab about the social events (which were awesome), or about how nice (but ungodly hot) Boston was (it was very nice). I’ve posted photos.

Here are the abridged highlights from my session notes:

Administration pre-conference

Kerry O’Brien

Learned a bunch about the Org Unit model in D2L – and something clicked when thinking about “Course Templates” – I’d been thinking Templates were course design templates. No. They’re course offering templates, used for grouping course offerings based on an organizational hierarchy. So, if you offer a course like Math 251, there’s a Course Template called “Math 251” and all offerings (instances of a course with students actually taking the course) are just Course Offerings that use the “Math 251” template. So, a course like “Math 251 – Fall 2013” is a Course Offering of “Math 251” (and also belongs to the “Fall 2013” semester org unit, and likely has Sections enabled so that Math 251 L01-L10 are contained within the single Course Offering). Sounds complicated, but once it clicked, I realized it should help to keep things nicely organized.

Also, the distinction between Sections and Groups was useful – Sections are defined by the Registrar – they’re official subdivisions of a Course Offering, and will be pulled from our PeopleSoft SIS integration – while Groups are informal ad hoc subdivisions that are created by the instructor(s) to put students into smaller bunches to work on stuff together.
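To make the hierarchy concrete, here’s a minimal sketch in Python. The class and method names (`CourseTemplate`, `offer`, `enrol`, `add_group`, and so on) are made up for illustration and are not D2L’s API or data model; they only mirror the relationships described above, under the assumption that Groups can only contain students already enrolled in one of the offering’s Sections.

```python
from dataclasses import dataclass, field


@dataclass
class Section:
    """Official subdivision of an offering, defined by the Registrar (e.g. L01)."""
    code: str
    students: set[str] = field(default_factory=set)


@dataclass
class Group:
    """Informal, ad hoc subdivision created by the instructor(s)."""
    name: str
    students: set[str] = field(default_factory=set)


@dataclass
class CourseOffering:
    """One instance of a course, with students actually taking it."""
    template: str   # e.g. "Math 251"
    semester: str   # e.g. "Fall 2013" (the semester org unit)
    sections: dict[str, Section] = field(default_factory=dict)
    groups: dict[str, Group] = field(default_factory=dict)

    @property
    def name(self) -> str:
        return f"{self.template} - {self.semester}"

    def enrol(self, student: str, section_code: str) -> None:
        # Section membership would really come from the SIS integration.
        self.sections[section_code].students.add(student)

    def roster(self) -> set[str]:
        # Everyone enrolled in any Section of this offering.
        return set().union(*(s.students for s in self.sections.values()))

    def add_group(self, name: str, students: list[str]) -> Group:
        # Assumption: a Group can only hold students already on the roster.
        missing = set(students) - self.roster()
        if missing:
            raise ValueError(f"not enrolled in this offering: {sorted(missing)}")
        group = Group(name, set(students))
        self.groups[name] = group
        return group


@dataclass
class CourseTemplate:
    """Groups all offerings of the same course; holds no design or content."""
    name: str
    offerings: list[CourseOffering] = field(default_factory=list)

    def offer(self, semester: str, section_codes: list[str]) -> CourseOffering:
        offering = CourseOffering(
            self.name, semester, {code: Section(code) for code in section_codes}
        )
        self.offerings.append(offering)
        return offering


math251 = CourseTemplate("Math 251")
fall = math251.offer("Fall 2013", [f"L{n:02d}" for n in range(1, 11)])
fall.enrol("alice", "L01")
fall.enrol("bob", "L02")
team = fall.add_group("Project team A", ["alice", "bob"])
```

The point of the sketch is that a Group can cut across Sections (alice is in L01, bob is in L02), while the Sections themselves stay whatever the Registrar says they are.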

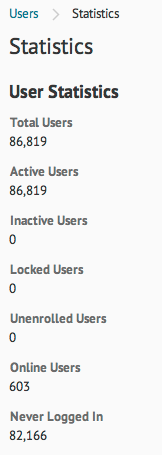

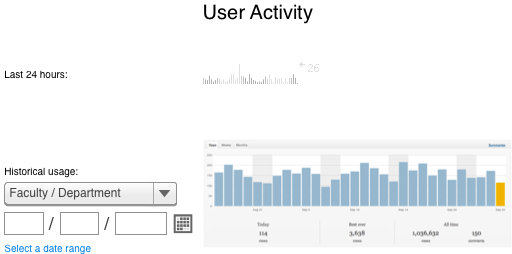

Analytics/Insights

Al Essa

I’ve seen Al online, but this was my first time seeing him in person. He’s a really interesting presenter, and made me overcome some of my resistance to analytics and enterprise data analysis. I’m still kind of pissed off at what the Gates Foundation is doing to education, but there’s something interesting going on with analytics, if we’re careful about how we interpret the data, and what we do with it.

One thing I’m really interested in exploring with the Analytics/Insights package is the modelling of curriculum and learning outcomes within a course, department, faculty, and across campus. This has some really interesting implications for the development and refinement of courses to make sure students are given the full range of learning experiences that will help them succeed in their program(s).

Navigation & Themes

Lindsay – Calgary Catholic School District

As an aside, one of the big reasons we went with D2L was because the CCSD and CBE (and SAIT, and Bow Valley College, and a bunch of other institutions in Calgary) use it, so we’ll have much more opportunity for collaboration and sharing of resources.

One thing we’ll have to figure out is what the default course layout and permissions should be – what tools/content should be presented by default, and what will instructors be able to change? I’d been thinking we should just hand the keys over to the instructors, and let them change everything if they want. But, we may need to think more about how to set up the default layout, and which bits need to be adjusted by instructors. This comes down to maturity level of the users, and of the organization, and on the level of training we’ve been able to provide by the time they get there… I’ll also be looking to keep things as simple as possible, while providing access to deeper tools for advanced users. Interesting tension there…

Quick & Dirty Implementation

Michel Singh, La Cite Collegial

Michel (and team) migrated about 5,000 FTE students from Blackboard CE to D2L. Signed a contract in May 2012, and launched on September 1 2012. The technology portion of the implementation and migration worked great. But training of instructors was the big risk and weak spot (sounds familiar…).

They did a “cold turkey” migration – everyone jumped into the pool together.

Some lessons from Michel:

- Have a plan and choose your battles

- Manage expectations (admin and faculty)

- Share responsibilities among your group

- Have empathy for users

- Structure your support team

- Leverage video and tutorials

- Celebrate small successes

- Maintain good communication with D2L

They used Course Templates in an interesting way – although course content isn’t automatically copied from Templates to Offerings, they used Templates as a central place for the curriculum review team to build the course and add resources, which could then be manually copied down into the Offering for use with students. Nice separation, while allowing collaboration on course design.

Victoria University D2L Implementation

Lisa Germany

They took on a wider task – refreshing the campus eLearning environment – which included migrating from WebCT to D2L in addition to curriculum reform and restructuring the university. Several eLearning tools were added or replaced, including D2L, Echo 360, Bb Collaborate, TurnItIn, and auditing of processes and data retention across systems.

Lessons learned:

- Understand how teaching & learning activities interact with university systems and determine high-level and detailed business requirements before going to tender (systems map, in context with connections between tools/platforms/data/people).

- Involve strategic-thinking people in selection process (not a simple tech decision – there are wider implications)

- Make sure requirements are written by people who have done something like this before…

- Involve the legal team from the start, so they aren’t the bottleneck at the end. cough

- Have a good statement of work outlining vendor and university roles/expectations.

- Don’t do it over the summer break! cough

- Have a business owner who is able to make initial decisions.

Program priorities (similar to what ours are):

- Time (WebCT expires – in our case, timeline driven by Bb license expiring in May 2014)

- Quality (don’t step backward, don’t screw it up)

- Scope (keep it manageable for all stakeholders)

They took a 3 semester timeline as well:

- Pilot

- Soft launch

- Cutover

DN: So, I’m feeling surprisingly good about the timeline we have, from the perspective of the technology. The biggest tech hurdle we have will be PeopleSoft integration, and that’s doable. It’s the training and user adoption that will kill this…

One Learning Environment

Greg Sabatine – UGuelph

In a way, as a new D2L campus, this was kind of a “don’t do it this way” kind of session. Guelph was the first D2L client, a decade ago, and has kind of accreted campus-wide adoption over the years. So, initial decisions didn’t scale or adapt to support additional use-cases…

They run 11 different instances of self-hosted D2L, on different upgrade schedules (based on the needs of the faculties using each instance – Medicine is on a different academic schedule than Agriculture, so servers can’t have the same upgrade timeline etc…). I wonder what our D2L-hosted infrastructure will do to us along these lines – minor upgrades are apparently seamless now with no downtime, but I wonder what Major Upgrades will do.

Looking at the Org Structure they use, we’ll likely have to mimic it, so that each Faculty can have some separation and control. So, our org structure would likely look like:

University > Faculty > Department > Course Offering

They did some freaky custom coding stuff to handle student logins, to point students to the appropriate Org Home Page depending on their program enrolment. Man, I hope we don’t have to do anything as funky as that…

Community of Collaboration in D2L

Barry Robinson – University System of Georgia

They use D2L with 30 out of 31 institutions in the system. Over 10,000 instructors. 120 LMS Administrators. 315,000 students across the system. Dang. That’s kinda big. They use a few groups to manage D2L and to make decisions:

- Strategic – Strategic Advisory Board – 15 reps from across the system, making the major decisions, meeting quarterly

- Strategic – Steering Committee – monthly meetings to guide implementation…

- Operational – LMS Admin Retreats – bi-annual sessions where the 120 LMS Admins get together to talk about stuff

- Tactical – LMS Admins Weekly Conference – every Thursday at 10am, an online meeting for the admins to discuss issues etc…

Community resources:

- A D2L course for the community to use to discuss/share things.

- Listserv

- Emergency communication system

- Helpdesk tickets

- Vendor – Operational Level Guidelines

- Vendor – SLAs

They migrated 322,347 courses from Bb Vista 8, and had over 99% success rate on course migrations…

D2L for Collaboration & Quality Assurance

Mindy Lee – Centennial College

They review curriculum every 3-5 years. Previously used wikis to collect info, but then had export-to-Word-hell. Needed to transition to continuous improvement, rather than static snapshots. Now, use a D2L course shell to share info and then build a Word document to report on it after the fact.

D2L Curriculum Review course shells:

- 1 for program. common place for all courses in a program. meta.

- 1 for each course under review – used to document evidence for the report (content simply uploaded as files in the course). Serves as a community resource for instructors teaching the course – shared content, rubric, etc…

DN: This last part is actually pretty cool – the curriculum review course site is used as an active resource by instructors who later teach the course. It’s not a separate static artifact. It’s part of the course design/offering/review/refinement process. Tie this into the Course Outcomes features, and there’s a pretty killer curriculum mapping system…

Maximize LOR Functionalities

Lamar State College

DN: the D2L LOR feature is actually one of the big things we’re looking to roll out. Which is kind of funny/ironic, given my history with building learning object repositories…

They use the LOR to selectively publish content to repositories within the organization – you can set audiences for content.

Would it make sense to use a LOR for just-in-time support resources? (similar to the videos available from D2L)?

From Contract to Go-Live in 90 Days

MSU

DN: 90 days. Holy crap. Our timeline looks downright relaxed in comparison. This should be interesting…

They had permanent committees guiding decisions and implementation, and a few ad hoc committees:

- LMS Guidance (CIO etc…)

- LMS Futures (Faculty members…)

- LMS Implementation (Tech team / IT)

On migration: they encouraged faculty to “re-engineer” courses rather than to simply copy them over from the old LMS. “If a course is really simple, it’s better to just recreate it in D2L. If it’s complex, or has a LOT of content, migrate it.”

Phased launch – don’t enable every feature at first – it’s overwhelming, and places an added burden on the training and support teams. Best to stage features in phases – key Learning Environment first, then other features like ePortfolio, LOR, Analytics, etc… once you’ve gotten running.

Train the trainers first (and key überusers).

Work with early adopters – they will make or break adoption in a faculty.

They ran several organized, small configuration drop-in sessions, each focused on a specific tool or task. Don’t try to do everything in one session…

8 Months vs. 8 Weeks: Rapid LMS Implementation

Thomas Tobin – Northeastern Illinois University

First, any session that starts with a soundtrack and Egyptian-Pharaoh-as-LMS-Metaphor thought exercise has GOT to be good.

Much of this should be common sense, but it’s super-useful to see how it lays out in the context of an LMS implementation…

On building the list of key stakeholders – ask each stakeholder “who else should we include? why?” – don’t miss anyone. they get cranky about that.

Identify the skeptics, and recruit them.

The implementation PM must have the authority to make decisions on the fly, or the project will stall.

Develop a charter agreement – define the scope, roles, goals, etc… so people know what’s going on.

The detailed Gantt chart is not for distribution. It has too much detail, changes regularly, and will freak people out.

Plan tasks in parallel vs. serial – break things out, delegate, and let them do their jobs. Success relies on multiple people working together, not single-worker-bottlenecks.

Use a gated plan for milestones and releases – and celebrate (small) successes. (but what do you do about failures?)

Designate 1 person as “schedule checker” – doesn’t have to be the project manager (actually, may be useful to be someone else…)

Assess existing and new risks regularly.

Do a “white glove” review – review and test all settings and features. So, the ePortfolio tool is supposed to be enabled. Is it? Were permissions set so people could actually use it? Were they trained? Does it work? etc…

Unified Communications at the Univ. System of Georgia

David Disney

Interesting talk – he pointed out the importance of making sure end users have current information on the status of tools/networks/services, so they’re not left guessing. I pointed out that if people have to monitor a status website to see how things are doing, that may be a symptom of larger problems…

They have a cool website for monitoring key services across the University System of Georgia.