I’ve been battling sploggers on UCalgaryBlogs continually. I just finished marking about 50 users/blogs as spam – that’s since yesterday afternoon. I could easily stop the problem outright by requiring people to use an @ucalgary.ca email address to create a site, but that goes against the possibility of anonymity, and many (most!) students don’t use their campus email addresses.

I currently run [Bad Behavior](http://www.bad-behavior.ioerror.us/), as well as [ReCaptcha](http://www.google.com/recaptcha). They stop the automated splog creation scripts, but there seem to be a LOT of people employed around the world to manually enter forms in order to get around captcha and anti-spam/splog techniques.

In looking through the WordPress.org forum on multisite (née WPMU) issues, I found a new post called “[Splog Spammer Final Solution?](http://wordpress.org/support/topic/splog-spammer-final-solution)”. It sounded a little like overkill, but when I thought about it, almost all splogs created on UCalgaryBlogs have come from a handful of countries, and they’re countries that students and faculty aren’t very likely to be creating new sites from. So I decided to try it out.

The forum post [links to a page containing lists of IP addresses and blocks](http://www.countryipblocks.net/networking/top-10-global-spammers-1q-2010/) belonging to countries where splog/spam activity is off the charts. All you do is copy some text, drop it into your .htaccess file, and hey presto. No more sites created from those countries.

Initially, I just banned all access from those countries. But that felt like a pretty slimy thing to do. So I stepped back and am now only blocking access to the site creation form, wp-signup.php, from those countries. If anyone affiliated with the university needs to blog while traveling the world, they’re free to do so, but they’ll need to have created the blog site from outside the Spam Zones. They should be able to access their sites, post content, etc. from anywhere.

I just tested the new splog-blocking technique, and it appears to be working. I’m really curious to see if it makes a dent on new splog creation.

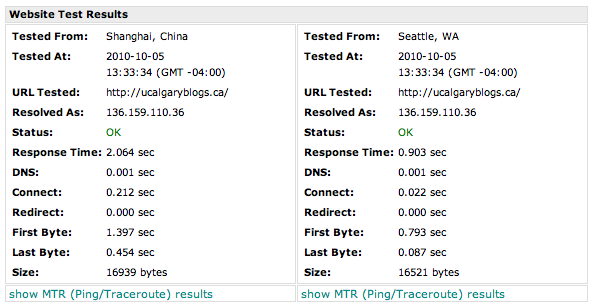

The wp-signup.php script is [blocked](http://www.websitepulse.com/help/testtools.china-test.html) in Spam Zones:

But the rest of the service is available as usual:

The .htaccess file for UCalgaryBlogs now contains this at the bottom (after the WordPress stuff):

    # block the fracking evil splog spammers
    <Files wp-signup.php>
    order allow,deny
    # (the contents of the various country block files go here)
    allow from all
    </Files>

**Update:** Duh. Instead of trying to blacklist IP addresses and blocks of suspected spammers, it makes more sense to whitelist IP addresses and blocks that are likely to be used by valid users trying to create sites. I’ve modified the .htaccess file to deny access to wp-signup.php to everyone but those accessing from IP addresses that don’t smell suspicious…
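
For anyone wanting to try the whitelist approach, here is a rough sketch of what it might look like. This is not the actual file from UCalgaryBlogs: the address ranges are placeholder documentation blocks (RFC 5737), and scoping the rules with a `<Files>` block is an assumption about how to limit them to wp-signup.php. It uses the same Apache 2.2-style access directives as the blacklist version.

```apache
# allow signups only from trusted address ranges
<Files wp-signup.php>
# evaluate Deny rules first, then let Allow rules override them
Order Deny,Allow
Deny from all
# hypothetical ranges (documentation blocks); substitute the ranges
# your real users are likely to sign up from
Allow from 192.0.2.0/24
Allow from 198.51.100.0/24
</Files>
```

With `Order Deny,Allow`, a request that matches both a Deny and an Allow rule is let through, so everyone is turned away from the signup form except the listed ranges. The rest of the site is untouched. On Apache 2.4 these directives are only available through mod_access_compat, and the newer equivalent would be `Require ip`.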