So Google is killing Reader:

> We launched Google Reader in 2005 in an effort to make it easy for people to discover and keep tabs on their favourite websites. While the product has a loyal following, over the years usage has declined. So, on July 1, 2013, we will retire Google Reader. Users and developers interested in RSS alternatives can export their data, including their subscriptions, with Google Takeout over the course of the next four months.

Translation: Thanks for letting us mine your activity and data for a few years. We’ve decided you just don’t make enough money for us, and we’ve decided to stop using your activity to feed into our search algorithm. You are no use to us anymore. We’re killing Reader. End transmission.

Translation 2: Using a web page to read feeds is emasculating.

I’m not at all surprised by this (remember iGoogle?).

But there is an easy way to reclaim your feed reader, so nobody can take it away from you, or cripple it, or mine your activities and data.

I switched to Fever˚ a couple of years ago, migrating all of my feeds from Google Reader, and I haven’t looked back. It’s not free – it costs a whopping $30 for a license. But the licensing fee goes to support a fantastic developer, and means that there are no ads or data mining or anything skanky.

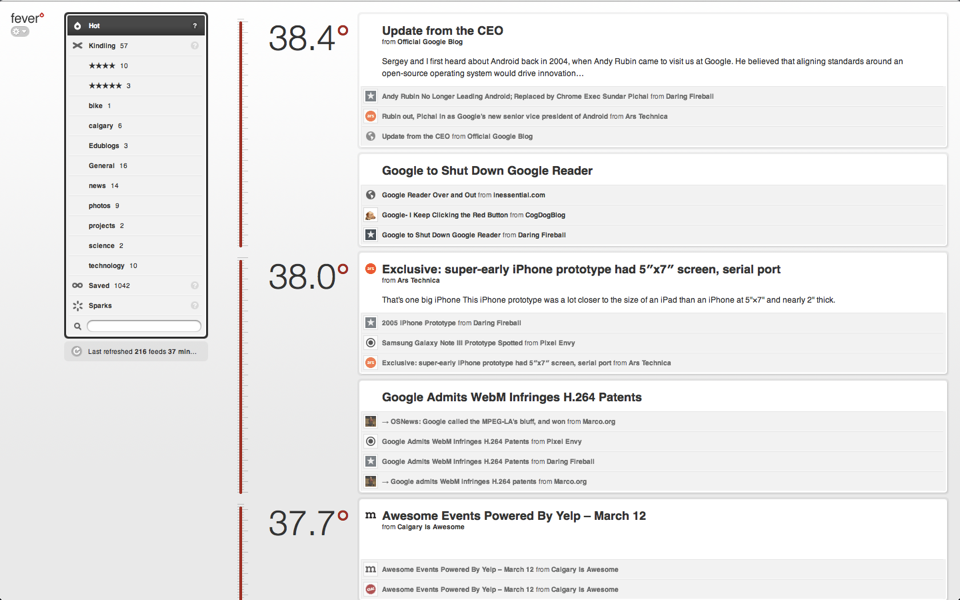

Here’s my current Fever˚ “Hot” dashboard:

Here’s my “★★★★★” folder of must-read feeds:

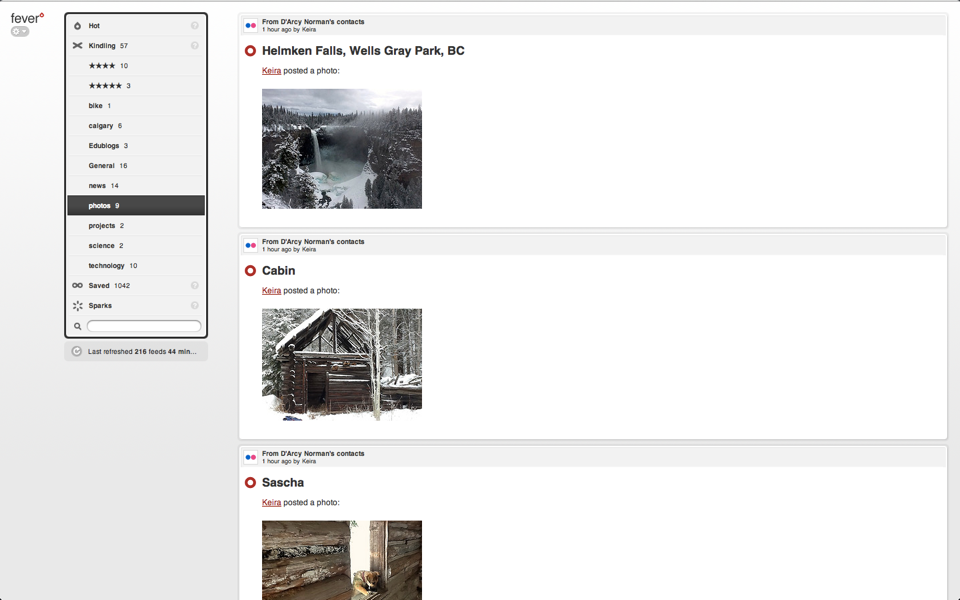

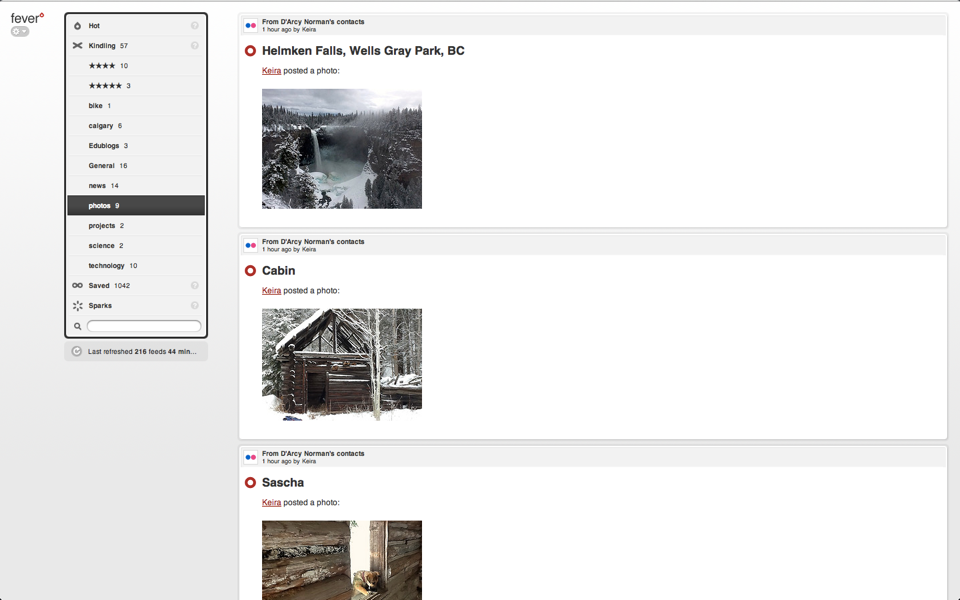

Here’s my “Photos” folder – mostly from Flickr users, but also people posting photos elsewhere. All in one handy feed display:

It’s also got a great iOS app, Reeder (which is best on the iPhone – pixel doubled on iPad for some reason).

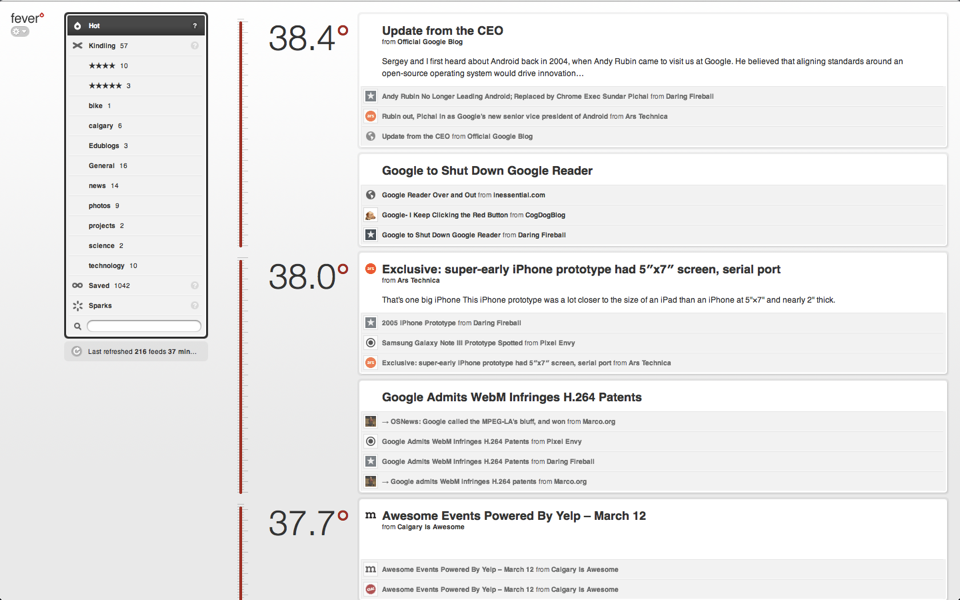

Screenshot of “hot” items in Reeder on my godphone:

And the five-star feed folder:

You can still “share” items – you can expose an RSS feed for items you star within Fever˚, and – wait for it – anyone can subscribe to that feed, using any reader that hasn’t been “sunsetted” by a giant corporation. I display my “shared” items on a page on my blog, powered by a self-hosted instance of Alan Levine’s awesome Feed2JS tool.
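Because the shared items are just a plain RSS feed, no particular service is needed to read them. As a minimal sketch, assuming an RSS 2.0 document (the sample XML, titles, and example.com URLs below are made up for illustration and are not Fever˚’s actual output), a few lines of standard-library Python can pull out each item’s title and link:

```python
import xml.etree.ElementTree as ET

# Hypothetical sample: a tiny RSS 2.0 document standing in for a
# "starred items" feed. This is NOT Fever's real output, just an illustration.
SAMPLE_FEED = """<rss version="2.0">
  <channel>
    <title>Starred items</title>
    <link>https://example.com/</link>
    <description>Items I starred</description>
    <item>
      <title>First post</title>
      <link>https://example.com/first</link>
    </item>
    <item>
      <title>Second post</title>
      <link>https://example.com/second</link>
    </item>
  </channel>
</rss>"""


def read_items(xml_text):
    """Return (title, link) pairs for every <item> in an RSS 2.0 document."""
    root = ET.fromstring(xml_text)
    items = []
    # iter() walks the whole tree, so it finds <item> elements inside <channel>.
    for item in root.iter("item"):
        title = item.findtext("title", default="")
        link = item.findtext("link", default="")
        items.append((title, link))
    return items


if __name__ == "__main__":
    for title, link in read_items(SAMPLE_FEED):
        print(f"{title}: {link}")
```

In practice you would fetch the feed’s URL first (for example with `urllib.request.urlopen`) and pass the response body to `read_items`; the point is only that an open format keeps the data readable by whatever tool you choose.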

It’s my Fever˚. No company can decide to “sunset” it. Well, I guess Shaun Inman, Fever˚’s developer, could decide to abandon it, but even if that happens, the software is running on my server, so in the worst case I just stop getting his updates (delivered through the fantastic automated software updater, btw).

Anyway. Google kills Reader. Not surprising. If you’re still relying on anything Google provides, it’s now shame on you. Reclaim your stuff.